| R. Kenny Jones¹, Paul Guerrero², Niloy J. Mitra²,³, Daniel Ritchie¹ |

| ¹Brown University, ²Adobe Research, ³University College London |

@article{jones2023ShapeCoder,

author = {Jones, R. Kenny and Guerrero, Paul and Mitra, Niloy J. and Ritchie, Daniel},

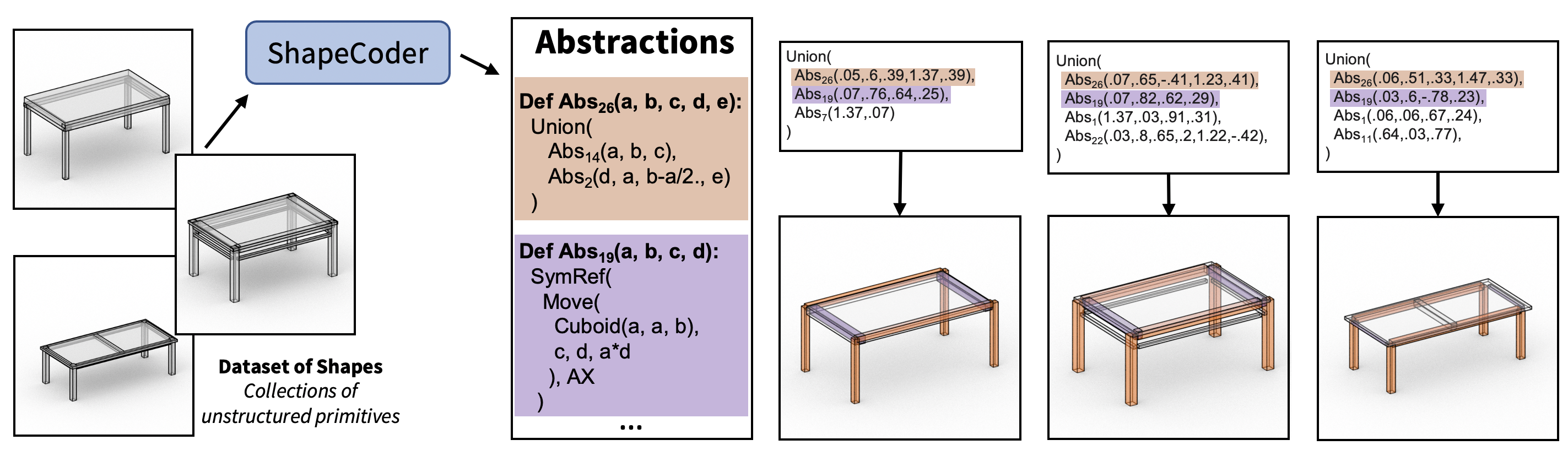

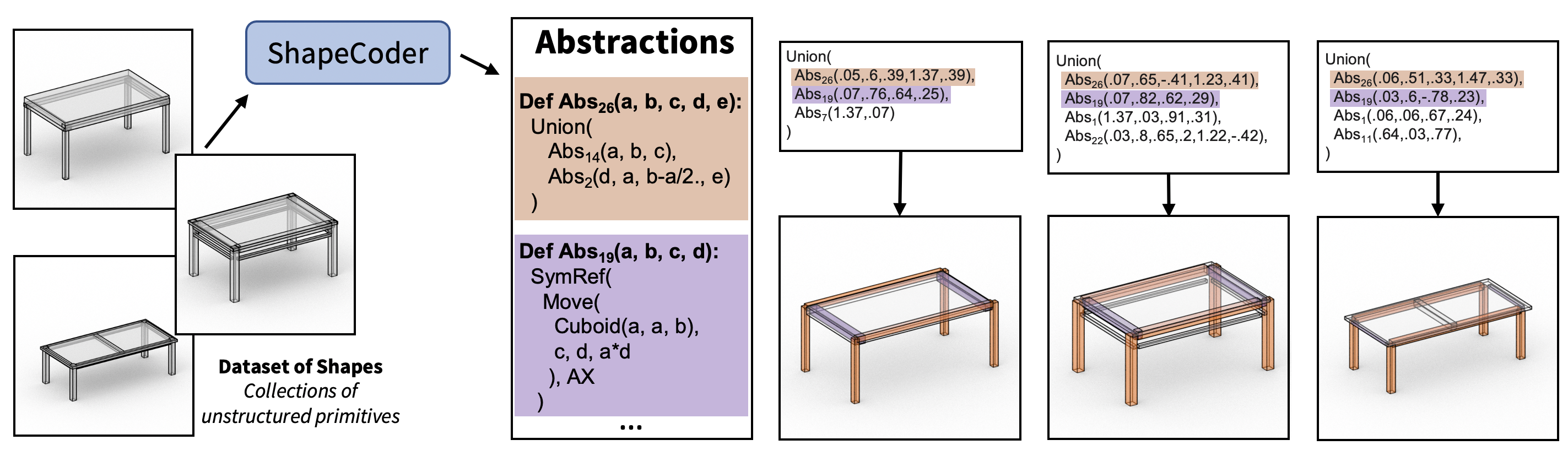

title = {ShapeCoder: Discovering Abstractions for Visual Programs from Unstructured Primitives},

year = {2023},

issue_date = {August 2023},

address = {New York, NY, USA},

volume = {42},

number = {4},

journal={ACM Transactions on Graphics (TOG), Siggraph 2023},

articleno = {49},

}